This entry started as the first post in a planned series about reporting with Mendix, but while capturing screenshots I realized that my example, taken from a working production application, was so poorly designed that I needed to address it first. The story to fix it isn’t yet prioritized above competing, higher-priority issues, but I’m going to explain what I did wrong in one of the first processes I ever wrote in Mendix and how I would design it differently had I followed my BI best practices and understood Mendix as well as I do now. Hopefully this comparison will help other Mendix developers look at each step more critically when optimizing their applications.

An application I helped develop (and continue to develop) is a massive Purchase Card Transaction Management System. It manages the approval workflow within a department for all personnel who use Purchase Cards (credit cards owned by the company) and posts the history of notes, approvals, and other necessary data to SAP so that payments to the bank can go through. Without going into great detail, the key purpose of the app was to replace the paper process in use today and give reviewers and approvers much-needed insight into where a transaction and its associated receipt are at any point in the process. As you can see from this high-level description, the application requires numerous features and, as such, numerous requirements for accountability of the process.

One of those requirements is to monitor how long a transaction sits in a workflow queue; in other words, how long a Reviewer or Approver leaves something in their ‘Inbox’ before completing their value-added part of the process. While learning a new technology like Mendix and Agile methodology at the same time, I completely lost track of my BI foundation and didn’t gather requirements in the procedural way I always do. For some reason, it didn’t occur to me that the process shouldn’t change just because the technology did. I knew what they wanted to measure (time in queue), the unit of measure (days), and one attribute of aggregation (Role). I became myopic and designed a solution that met only the stated requirement without considering the bigger picture.

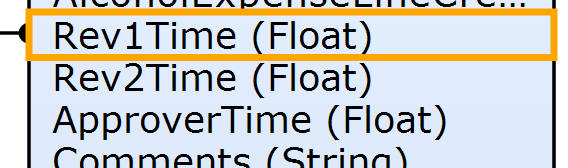

First, I needed to create attributes to store the time data. I chose the ‘Expense’ entity because I only needed an aggregate of each of the three roles’ (Reviewer1, Reviewer2, and Approver) times per Expense. For clarification, in this app ‘Expense’ has a one-to-many relationship to ‘Workflow’. I set the attributes up as type ‘Float’ because the required unit of measurement was days, and I knew I would be calculating partial days and would therefore need decimals.

This approach led me down a path of inflexibility. I had no way to calculate the queue times of an individual person in the process. Additionally, what if the roles changed or I added new ones? I would have individual attributes that had to be modified. And across Departments, the workflow might be entirely different. I was building the application to treat data as configurable per Department, but when it came to reporting I had developed a completely inflexible domain model.

Next I developed the microflows that update these queue times. The Workflow is what actually drives a change in queue time, so these values should only be updated when a Workflow changes. Instead, I pass in the Expense, because that is where the attributes are located, then retrieve ALL Workflow objects, loop through them to find the ones that match the role I am concerned about, accumulate their times in a running-total variable, and then update the Expense attribute accordingly. <Headslap>.
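Microflows are visual, so they can’t be pasted here as text, but a rough Python sketch of what that original design effectively does may make the problem concrete. The class, attribute, and role names (`Expense`, `Workflow`, `rev1_time`, `queue_days`, `ROLE_TO_ATTRIBUTE`, `update_queue_time`) are hypothetical stand-ins, not the actual model; the point is the shape of the logic: one hard-coded attribute per role and a full scan of every Workflow on each update.

```python
from dataclasses import dataclass, field


@dataclass
class Workflow:
    role: str          # which role owned this workflow step
    queue_days: float  # fractional days the step sat in that role's queue


@dataclass
class Expense:
    # Original design (hypothetical names): one hard-coded Float attribute per role.
    rev1_time: float = 0.0
    rev2_time: float = 0.0
    approver_time: float = 0.0
    workflows: list[Workflow] = field(default_factory=list)


# Every role needs its own attribute and mapping entry; a new role means a model change.
ROLE_TO_ATTRIBUTE = {
    "Reviewer1": "rev1_time",
    "Reviewer2": "rev2_time",
    "Approver": "approver_time",
}


def update_queue_time(expense: Expense, role: str) -> None:
    """Recompute one role's total by scanning every Workflow on the Expense."""
    total = 0.0                       # running-total variable
    for wf in expense.workflows:      # retrieve ALL Workflow objects and loop
        if wf.role == role:           # keep only the role of interest
            total += wf.queue_days
    setattr(expense, ROLE_TO_ATTRIBUTE[role], total)  # update the Expense attribute


expense = Expense(workflows=[
    Workflow("Reviewer1", 1.5),
    Workflow("Approver", 2.0),
    Workflow("Reviewer1", 0.25),
])
for role in ROLE_TO_ATTRIBUTE:  # one full scan per role
    update_queue_time(expense, role)
print(expense.rev1_time, expense.rev2_time, expense.approver_time)  # 1.75 0.0 2.0
```

Note that the per-person view is simply not representable here: the totals are collapsed onto the Expense, and the only dimension available is whichever roles were baked into the model.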

Before I get any harder on myself, I must explain two things. First, this was one of the very first processes I ever developed in Mendix on my own, and it achieved the goal. Remember, achieving the goal is paramount, as you can always come back and optimize later. I was able to give the users a report out of the system that answered the business question of how long expenses were sitting in the various roles’ queues. Second, I could easily have skipped this posting entirely and let you think I always have the right answer rather than expose my poor early designs. But I tend to learn more from my mistakes than from my successes, so hopefully the same is true for some of you and this helps you get it right the first time.

Let me explain what I SHOULD have done (and will do when this story becomes a priority!):

This all goes back to gathering good requirements. Needs change, and therefore requirements are fluid. As a subject matter expert (SME), you are supposed to think about the big picture. I failed to do so and developed a solution that, while it works for the immediate need, fails to scale for future needs. When developing a metric, you need to ask a few key questions (I’ll answer each one for this example queue-time metric):

- What are we measuring? – Queue time

- What is ‘queue time’ (the Start time and the End time)? – The difference between a Workflow object’s Created Date and Changed Date (leverage the entity’s built-in system members to capture those)

- What is the unit of measurement (seconds, minutes, etc.)? – Days

- What triggers change (when do I update it)? – Any time a Workflow object is changed.

- Are there exceptions to what constitutes sitting ‘in-queue’? – In this example, no, but you need to get as many of these on the table as early as possible so you can discuss, decide, and develop for them. For example, if a Workflow object is created as part of sending an email, you might not want that time counted, depending on who is identified as the owner of that Workflow object.

- What is the output? – For now it is simply part of an Expense ‘Export to Excel’ data dump. There are plans to create reports on this metric in the near future, but other features have taken priority. The key is that users have access to the queue times, so prettying them up is just not a priority for now. Don’t focus on this early on; just get the data captured.
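With those answers pinned down, the metric itself is simple arithmetic. The Python sketch below assumes the start and end of queue time are a Workflow object’s created and changed timestamps and expresses the difference in fractional days, matching the ‘Days’ unit and the need for partial days. The function name `queue_time_days` is hypothetical, and this sketch does not try to reproduce how Mendix’s own date functions round their results.

```python
from datetime import datetime

SECONDS_PER_DAY = 24 * 60 * 60


def queue_time_days(created: datetime, changed: datetime) -> float:
    """Time a Workflow object sat in queue, in fractional days.

    created: when the Workflow object was created (start of queue time)
    changed: when it was last changed (end of queue time)
    """
    if changed < created:
        raise ValueError("changed date precedes created date")
    return (changed - created).total_seconds() / SECONDS_PER_DAY


# Created Monday 09:00, changed Wednesday 21:00: 2 days 12 hours in queue.
print(queue_time_days(datetime(2024, 1, 1, 9, 0), datetime(2024, 1, 3, 21, 0)))  # 2.5
```

Any exceptions agreed on during requirements gathering (such as excluding email-generated Workflow objects) would become explicit filters applied before this calculation, which is exactly why they need to be on the table early.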

Had I been thorough, I would have quickly realized a few key differences from what I designed. Workflow, not Expense, triggers the change. That one critical piece of information changes the granularity at which the data should be captured in the domain model. I should have designed the application domain model like this:

In the ‘Workflow’ entity, create one attribute named ‘QueueTime’ as a calculated attribute whose microflow computes ‘daysBetween’ the CreatedDate and ChangedDate and returns the result as ‘QueueTime’. This would allow me to aggregate that time by any of the attributes in the Workflow object, such as Workflow owners and Status enumeration values, as well as by the Expense. The model is flexible enough to handle whatever I throw at it.
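A rough Python analogue of that redesigned model: queue time lives on each Workflow as a computed value instead of as role-specific attributes on the Expense, so any Workflow attribute can act as the grouping key. A Python `property` stands in for the calculated attribute here; the field names (`expense_id`, `role`, `owner`, `status`, `created_date`, `changed_date`) and the helper `total_queue_time_by` are hypothetical illustrations, not the app’s actual model.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Workflow:
    expense_id: int         # association to the Expense (hypothetical key)
    role: str               # configured role data, not a hard-coded attribute
    owner: str
    status: str
    created_date: datetime  # start of queue time
    changed_date: datetime  # end of queue time

    @property
    def queue_time(self) -> float:
        """Calculated attribute: fractional days between created and changed."""
        return (self.changed_date - self.created_date).total_seconds() / 86400


def total_queue_time_by(workflows: list[Workflow], attribute: str) -> dict[object, float]:
    """Sum queue time grouped by any Workflow attribute (role, owner, status, ...)."""
    totals: dict[object, float] = defaultdict(float)
    for wf in workflows:
        totals[getattr(wf, attribute)] += wf.queue_time
    return dict(totals)


d = datetime
workflows = [
    Workflow(1, "Reviewer1", "alice", "Approved", d(2024, 1, 1, 9), d(2024, 1, 2, 9)),
    Workflow(1, "Approver", "bob", "Approved", d(2024, 1, 2, 9), d(2024, 1, 2, 21)),
    Workflow(2, "Reviewer1", "alice", "Returned", d(2024, 1, 3, 9), d(2024, 1, 3, 15)),
]
print(total_queue_time_by(workflows, "role"))        # {'Reviewer1': 1.25, 'Approver': 0.5}
print(total_queue_time_by(workflows, "expense_id"))  # {1: 1.5, 2: 0.25}
```

The same function answers per-role, per-owner, per-status, and per-Expense questions, which is the flexibility the per-role Expense attributes could never provide.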

Had I done that, I wouldn’t have needed the inefficient microflow that loops through data and all kinds of other nonsense; the logic works, but it is completely inefficient. From a reporting perspective, in my ‘Report’ module (separate from the App module), I could create an entity, persistent or not depending on how I want to use the data, and build microflows that use list Retrieve actions constrained by XPath to the role I want on the Workflow object, plus List Operations to filter and aggregate as needed for the reports, such as Document Template sourced reports. If that isn’t clear because I’m glossing over it too much, please leave some feedback in the comments and I’ll update the posting with an example of what that would look like. The key is that I wouldn’t have to create attributes specific to each type of metric like I did to begin with (Rev1Time, Rev2Time, etc.). Roles should be configurable, and therefore capturing the time should be based on whatever was configured in the Workflow object’s data, not predefined as a model object as it is currently.
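A minimal sketch of that report-side logic, again in Python as a stand-in for microflow actions: a filtered retrieve (the analogue of an XPath constraint on the role) followed by list-style aggregation into one non-persistent report row per role. `RoleQueueReport`, `retrieve_by_role`, `build_role_report`, and their fields are hypothetical names chosen for illustration. Because the roles are read from the data rather than from the model, a newly configured role appears in the report with no domain-model change.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    role: str
    queue_time: float  # fractional days, as calculated on the Workflow


@dataclass
class RoleQueueReport:
    """Non-persistent report row: one per role, whatever roles are configured."""
    role: str
    count: int
    total_days: float
    average_days: float


def retrieve_by_role(workflows: list[Workflow], role: str) -> list[Workflow]:
    # Stand-in for a Retrieve action constrained by XPath to a single role.
    return [wf for wf in workflows if wf.role == role]


def build_role_report(workflows: list[Workflow]) -> list[RoleQueueReport]:
    rows = []
    # Roles come from the data, so a newly configured role needs no model change.
    for role in sorted({wf.role for wf in workflows}):
        matches = retrieve_by_role(workflows, role)
        total = sum(wf.queue_time for wf in matches)  # list operation: sum
        rows.append(RoleQueueReport(role, len(matches), total, total / len(matches)))
    return rows


sample = [
    Workflow("Reviewer1", 1.0),
    Workflow("Approver", 0.5),
    Workflow("Reviewer1", 0.25),
    Workflow("Reviewer2", 2.0),
]
for row in build_role_report(sample):
    print(row)
```

Each row could then feed an export or a Document Template without any role-specific attributes on the Expense.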

I hope this example of functional yet poor design, and the description of how I plan to fix it, helps others evaluate what they are working on as well. This isn’t specific to Mendix; it applies to any scenario that requires getting the domain model right. Be rigorous in your requirement gathering and critical of your solutions, and your tenacity will pay off in improved efficiency and proficiency.